Algorithms roll out. Acronyms multiply. And marketers? Still pursuing the same thing — search visibility.

Lately, even the language of search feels unstable. SEO became AEO. Then GEO. As a user joked on Tim Soulo’s LinkedIn post about this, maybe it’s time to just call it “LMNOP.”

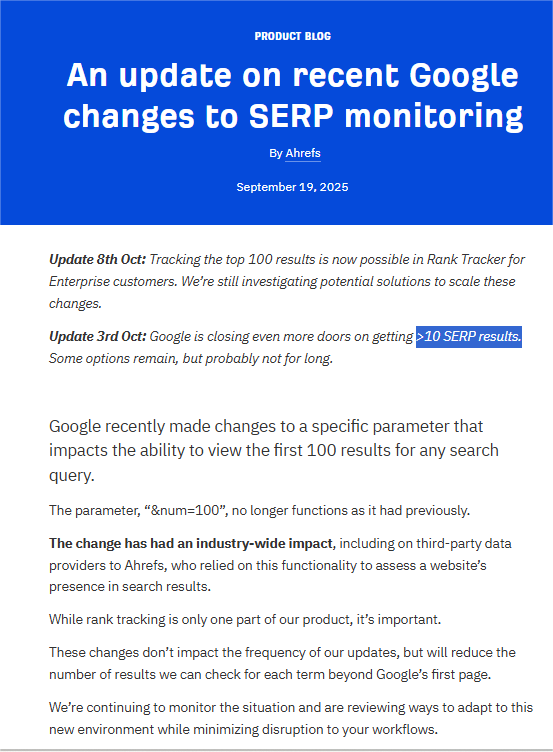

SEO professionals and marketers aren’t laughing, though, especially after Google’s hush-hush removal of the num=100 parameter on September 14, 2025. This Google search results update reportedly dented keyword visibility for roughly 77% of sites.

In plain English: SEO experts and rank-tracking tools used to see all 100 results in one go. Not anymore. Fetching 100 results now takes 10 separate requests instead of one. Big deal? Absolutely.

The Google n=100 SERP update fundamentally changes how rank-tracking tools, analytics platforms, and visibility reports collect data.

In this blog, we’ll break down exactly what the n=100 SERP was, why Google made the change, how it’s affecting Google Search Console (GSC) and other SEO tools, and what SEO professionals and organizations should do to adapt.

What is (was) the n=100 SERP?

I read it somewhere: lead forms are like eyebrows. You only notice them when they’re either perfect or a disaster. I think it was Ann Handley. Anyway, the n=100 SERP worked the same way.

Most of the time, it ran quietly in the background, barely noticed. It powered the visibility data we take for granted in tools like GSC, Ahrefs, SEMrush, and pretty much every rank tracker out there.

A single request gave you 100 search results in one go. That may have seemed like a minor convenience, but it was essential for SEO projects.

Remove it — as Google did on September 14, 2025 — and you realize the value of that invisible helping hand. In a big way.

So, what’s the n=100 SERP?

The n=100 SERP (also called &num=100) was a hidden Google search parameter. It let users and SEO tools load 100 results per page instead of the default 10.

It mattered because?

For anyone doing serious keyword research, competitor analysis, or large-scale SEO, being able to see 100 results in one shot was not just a huge time saver, but also gave a broader view of keyword performance.

To get 100 search results per page, all you had to do was:

Add &num=100 to a Google search URL.

As in:

https://www.google.com/search?q=example&num=100

With the parameter gone, rank trackers and other SEO tools have to execute 10 separate SERP requests to retrieve the same depth of data.

That means more workload and, often, partial ranking data, because digging through deeper SERP results is now roughly 10 times more expensive and less efficient for low-ranking keywords.

In simple terms:

Before → One page showed 100 results (fast, cheap, complete).

Now → You see 10 results at a time (slower, costlier for SEO tools, less transparent).
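To make the before-and-after concrete, here is a minimal Python sketch that only builds the URLs involved (it does not send any requests). It assumes pagination works through Google's `start` offset parameter; the helper names `legacy_url` and `paginated_urls` are made up for illustration.

```python
from urllib.parse import urlencode

BASE = "https://www.google.com/search"


def legacy_url(query: str) -> str:
    """The single URL that used to return up to 100 results."""
    return f"{BASE}?{urlencode({'q': query, 'num': 100})}"


def paginated_urls(query: str, depth: int = 100, page_size: int = 10) -> list[str]:
    """One URL per results page, stepping the `start` offset (assumed parameter)."""
    return [
        f"{BASE}?{urlencode({'q': query, 'start': offset})}"
        for offset in range(0, depth, page_size)
    ]


print(legacy_url("example"))           # the old one-shot URL
print(len(paginated_urls("example")))  # 10 page URLs to cover the same depth
```

The point is simply the count: one URL before, ten URLs now for the same 100 positions.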

Why has Google benched the n=100 SERP?

Google hasn’t really cleared the air on this one. When asked, a Google spokesperson told Search Engine Land that “the use of this URL parameter is not something we formally support.” Which, in Google-speak, sounds like: “Yeah, it was never official. And no, we’re not bringing it back.”

But why?

Officially, no reason has been confirmed.

But based on what we know — and what the data community has pieced together — there are a few likely explanations, or should we say, theories:

1. Stopping mass scraping and bot abuse

For years, both SEO tools and AI platforms leaned on &num=100 to grab massive chunks of SERP data at once.

- Removing that option throttles bulk scraping and slows down bots.

- It eases server load and preserves resources for real users.

- It enforces Google’s long‑standing terms of service against automated data extraction.

In short, it’s Google tightening the tap on anyone trying to copy its results at scale.

2. Fighting AI data harvesting

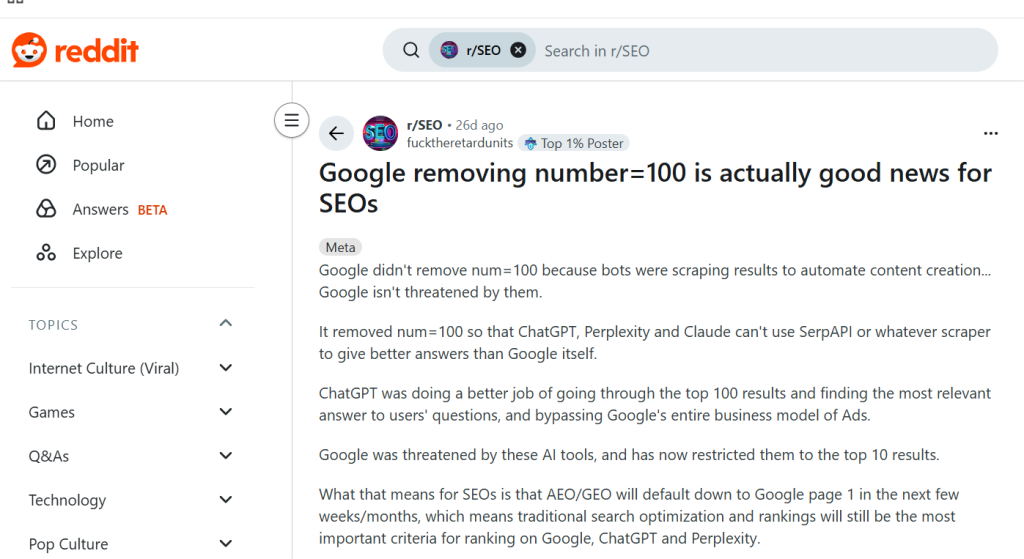

There’s a growing conjecture that this update was about more than rank‑tracking tools. It was about AI.

- Generative AI tools like ChatGPT, Perplexity, and Claude are thought to have relied on scraped SERP data to train models and churn out search answers.

- Those systems could scan 100 results and serve insights faster than Google itself.

- By limiting access, Google keeps valuable SERP intelligence—and ad revenue—inside its own ecosystem.

As one Redditor put it: “Google didn’t remove n=100 because of bots—it removed it so AI tools stop getting smarter off its data.”

3. To clean up performance data

There’s a theory that inflated impression counts in GSC were partly caused by bots hitting search results en masse.

If true, removing num=100 helps Google deliver more accurate, human-centric visibility data. It’s possible some of the “visibility drops” SEOs are seeing now aren’t actual ranking losses — just bot impressions being filtered out.

4. Protecting infrastructure and user experience

Even if you ignore the AI search angle, Google’s servers benefit.

- Scraping hundreds of results per query adds massive strain.

- Fewer results per page means less bandwidth and faster responses for human users.

- It also helps improve site stability, particularly with the increased AI crawling expected in 2025.

5. Steering SEOs toward Google’s own tools

Another theory floating around: Google’s strategic nudge.

- Removing n=100 forces marketers to rely more heavily on GSC for position data.

- Some SEOs even speculate that Google could unveil its own advanced, paid rank‑tracking features soon.

- It’s classic ecosystem control—limiting external dependency while promoting first‑party analytics solutions. Whether that’s cynical or smart business depends on your side of the table.

So, what’s the truth?

Probably a blend of all of the above. There’s no definitive answer yet, but it’s safe to assume this Google search result update reflects Google’s broader shift:

- Defend infrastructure.

- Guard data from AI tools.

- Maintain competitive advantage.

- Keep the search experience human‑centric and ad‑friendly.

How the n=100 SERP update is impacting GSC, Ahrefs, and other SEO tools

It would be an understatement to say that the death of the &num=100 parameter frustrates data nerds. It reshapes how visibility data appears in GSC, Ahrefs, SEMrush, AccuRanker, and practically every rank tracker that has ever existed on the planet.

This is what the Google n=100 SERP update might do to your visibility data collection:

1. Data gaps and errors in rank tracking

The removal of the &num=100 parameter disrupted platforms that depended on loading 100 SERP results at once.

Rank-tracking tools suffered:

- Missing SERP screenshot data.

- Sudden drops in keyword ranking sensors.

- Error messages and incomplete rank reporting.

These disruptions went beyond tech glitches and affected SEO professionals’ ability to reliably measure and report visibility.

2. Drop in impressions and keyword visibility

Around 87.7% of websites observed drops in GSC impressions. About 77.6% lost visibility for mid- and short-tail keywords.

- This was largely due to the elimination of inflated impressions caused by bots scraping 100 results per page, resulting in cleaner, more accurate data.

- Average positions appeared to improve, but this reflects the reduction of bot-generated impressions rather than actual ranking gains.
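A quick worked example shows why average position can "improve" while nothing actually moved. The sketch below treats average position as an impression-weighted mean, which is a simplification of how GSC reports it, and the numbers are invented purely for illustration.

```python
def average_position(impressions_by_position: dict[int, int]) -> float:
    """Impression-weighted mean position (simplified model of GSC's metric)."""
    total = sum(impressions_by_position.values())
    if total == 0:
        raise ValueError("no impressions recorded")
    weighted = sum(pos * count for pos, count in impressions_by_position.items())
    return weighted / total


# Hypothetical page: 100 human impressions at position 3,
# plus 50 bot impressions logged at position 85 via 100-result pages.
with_bots = {3: 100, 85: 50}
humans_only = {3: 100}

print(round(average_position(with_bots), 2))  # 30.33
print(average_position(humans_only))          # 3.0
```

In this made-up case impressions fall from 150 to 100 and average position jumps from about 30 to 3, yet the page ranks exactly where it did before.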

3. Long-tail keyword tracking takes a hit

Fetching deeper SERP layers now costs more, so tools are prioritizing short-tail and mid-tail queries.

- Long-tail data, often refreshed less frequently, might appear incomplete or delayed.

- This limits keyword discovery, especially for those who rely on niche, low-volume opportunities.

4. Rising costs for SEO tools

Without &num=100, fetching 100 results requires making 10 separate requests. Unsurprisingly, server costs, proxy usage, and API expenses increase.

- Smaller SEO tools face operational struggles and higher costs. Subscription fee hikes or service limitations could follow.

- Larger consolidated providers may dominate as niche players struggle to adapt.
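The arithmetic behind that cost jump is easy to sketch. The keyword count and the price per 1,000 requests below are hypothetical placeholders, not figures from any vendor.

```python
import math

PRICE_PER_1K_REQUESTS = 1.50  # hypothetical price, for illustration only


def requests_needed(keywords: int, depth: int, results_per_request: int) -> int:
    """Requests required to check `depth` positions for every keyword once."""
    return keywords * math.ceil(depth / results_per_request)


def daily_cost(requests: int) -> float:
    """Cost of one daily crawl at the assumed price."""
    return requests / 1000 * PRICE_PER_1K_REQUESTS


before = requests_needed(keywords=1000, depth=100, results_per_request=100)
after = requests_needed(keywords=1000, depth=100, results_per_request=10)

print(before, daily_cost(before))  # 1000 1.5
print(after, daily_cost(after))    # 10000 15.0
```

Whatever the real unit price is, the request count scales tenfold at full depth, and proxy and infrastructure costs tend to scale with it.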

5. Reduced depth and reliability of rank tracking

Many SEO tools are scaling back to tracking fewer positions (Top 10 or Top 20) daily. A hybrid approach is sometimes used, where top results are updated daily, and deeper results less frequently.

- This shift reduces visibility into long-tail keywords and competitor data beyond the first page.

- Expect more volatility and “noise” in ranking dashboards.
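The hybrid approach can be sketched as a simple schedule: shallow checks most days, one deep check per week. The depths, the choice of Monday, and the function names are assumptions for illustration, not how any particular tool works.

```python
import math
from datetime import date, timedelta


def tracking_depth(day: date, top_depth: int = 20, deep_depth: int = 100,
                   deep_weekday: int = 0) -> int:
    """Positions to check on `day`; deep crawl on one weekday (0 = Monday)."""
    return deep_depth if day.weekday() == deep_weekday else top_depth


def weekly_requests(week_start: date, page_size: int = 10, **depths: int) -> int:
    """Requests per keyword over seven days at 10 results per request."""
    days = (week_start + timedelta(days=i) for i in range(7))
    return sum(math.ceil(tracking_depth(d, **depths) / page_size) for d in days)


monday = date(2025, 9, 15)
print(weekly_requests(monday))                 # 22 with the hybrid schedule
print(weekly_requests(monday, top_depth=100))  # 70 if every day goes 100 deep
```

Under these assumptions the hybrid schedule needs 22 requests per keyword per week instead of 70, which is the trade-off behind the reduced freshness of deeper positions.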

6. Challenges in year-over-year and trend analysis

The fundamental change in how impressions and ranking data are collected means:

- Comparing 2025 data to previous years can be misleading due to discontinuities.

- Marketers must flag these disparities in reporting to avoid false conclusions.
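One lightweight way to flag the discontinuity is to label every reporting period relative to the change and attach a note whenever a comparison crosses it. This is a sketch only: the cutoff uses the September 14, 2025 removal date mentioned earlier, and the function names and note wording are made up.

```python
from datetime import date
from typing import Optional

CUTOFF = date(2025, 9, 14)  # removal date cited in this article

Period = tuple[date, date]  # (start, end), inclusive


def regime(period: Period) -> str:
    """Label a period as 'pre', 'post', or 'mixed' relative to the cutoff."""
    start, end = period
    if end < CUTOFF:
        return "pre"
    if start >= CUTOFF:
        return "post"
    return "mixed"


def comparison_note(current: Period, previous: Period) -> Optional[str]:
    """Return a caveat when two periods were measured under different conditions."""
    a, b = regime(current), regime(previous)
    if a == b and a != "mixed":
        return None
    return f"Caution: comparing {a} vs {b} num=100 data; impressions are not like-for-like."


oct_2025 = (date(2025, 10, 1), date(2025, 10, 31))
oct_2024 = (date(2024, 10, 1), date(2024, 10, 31))
print(comparison_note(oct_2025, oct_2024))
```

A note like this can be appended automatically to any year-over-year chart that spans the change.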

What marketers and businesses need to understand

- The drop in GSC impressions does not indicate a worsening of website performance. It is a recalibration toward real human visibility, achieved by removing bot-inflated impressions.

- SEO reports will initially appear “worse,” but they reflect more authentic user behavior.

- Rank tracking beyond page 2 is now less reliable. Adapt reporting expectations accordingly.

- Rising costs for rank tracking might affect budgeting decisions in digital marketing teams.

- Tools like SEMrush, Ahrefs, and AccuRanker are actively communicating these changes and working on platform adaptations.

What SEO professionals and brands should do next

Don’t panic. Adapt. Here’s how.

- Reset your baseline. Mark September 14, 2025 as the before-and-after line for your data, then check your Search Console trends for impressions and average position on either side of it.

- Ask your SEO tool providers. Check how they’ve adapted. Be prepared for potential cost increases resulting from the higher volume of requests.

- Adjust reporting and communication. Explain to stakeholders that impression drops and ranking shifts may be due to this change, not performance. Shift focus to business outcomes like conversions and revenue rather than just rankings or impressions.

- Audit your rank tracking setup. Understand if your tools limit tracking depth or lower update frequency. This helps avoid surprises in client reporting and budget planning.

- Model your GSC data. Use statistical modeling to fill gaps in impression data or smooth fluctuations during this transition for more consistent trend analysis.

- Diversify marketing efforts. Given ranking volatility, use complementary channels like display ads and content marketing to stabilize traffic.

- Value quality and user experience. With Google pushing AI-powered results, prioritize content relevance, site health, and user signals for long-term SEO success.
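For the baseline and modeling steps above, even very simple statistics go a long way. The sketch below is one possible starting point, not a full model: a trailing moving average that tolerates missing days, and a ratio that summarizes how far the impression baseline shifted. The sample numbers are invented.

```python
from statistics import mean
from typing import Optional


def rolling_mean(values: list[Optional[float]], window: int = 7) -> list[Optional[float]]:
    """Trailing moving average that skips missing (None) days."""
    smoothed = []
    for i in range(len(values)):
        chunk = [v for v in values[max(0, i - window + 1): i + 1] if v is not None]
        smoothed.append(mean(chunk) if chunk else None)
    return smoothed


def baseline_shift(before: list[float], after: list[float]) -> float:
    """Ratio of average daily impressions after the change to before it."""
    return mean(after) / mean(before)


# Hypothetical daily impressions exported from Search Console.
print(rolling_mean([10, None, 30], window=3))       # [10, 10, 20]
print(baseline_shift([1000, 1200], [600, 720]))     # 0.6
```

A shift ratio like 0.6 gives stakeholders a single number for "the new normal," so later movements can be judged against the reset baseline rather than the old one.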

The road ahead

The Google n=100 SERP update is changing how SEO professionals track rankings and measure visibility. How has it impacted your SEO strategy? Have you adapted your workflows or found new ways to navigate this shift for better content and insights?

Share your thoughts in the comments or contact us — let’s discuss actionable strategies to thrive in this new era of search.

Urja Patel - Content Writer

Urja Patel is a content writer at Mavlers who's been writing content professionally for five years. She's an Aquarius with an analyzer's brain and a dreamer's heart. She has this quirky reflex for fixing formatting mid-draft. When she's not crafting content, she's trying to read a book while her son narrates his own action movie beside her.
