Let’s start with a confession: I used to hate web scraping.

There, I said it.

If you’ve ever burnt the midnight oil trying to fix a broken scraper because a website changed one tiny div class, you know that particular flavor of frustration.

You finally get your script to pull clean data, feel like a genius, go to sleep… and the next morning, the site redesign nukes your code.

It’s like spending hours building a sandcastle, only for the tide to roll in.

But something’s shifted. The way we collect and process web data has quietly entered a new era, one where AI bots for web scraping can do in minutes what used to take us days.

We’re transitioning from hand-coded scripts to AI-powered web scraping, and honestly, it’s redefining how developers, marketers, and businesses approach data altogether.

Let’s unpack this transformation as we move from the old “code everything yourself” grind to the shiny, automated, no-code AI scrapers that are changing what’s possible.

Why does everyone want to scrape the web?

So, the internet is basically a giant treasure chest of information, only it’s locked inside a billion different boxes.

E-commerce stores want competitor pricing, travel startups need destination data, researchers want reviews, and marketers want leads.

Everyone needs data. And web scraping is how we open those boxes.

The challenge lies in the fact that the web isn’t built for easy access. Each website is its own messy, ever-changing jungle of HTML, JavaScript, and hidden APIs. You either fight through it manually (which is slow and soul-sucking) or you automate it (which used to be fragile).

That was the norm until AI came along.

Reliving the old days ~ When scraping meant code, coffee, and chaos

Before AI got involved, web scraping meant code and caffeine.

1. Building in-house scrapers

You’d hand-craft your scraper from scratch, maybe in Python with BeautifulSoup or Scrapy.

The upside?

You got full control. The data stayed on your servers, you could tailor the logic exactly to your business, and you felt like a hacker genius watching the console spit out clean JSON.

The downside?

Every new website was a new headache. Every layout change meant rewrites. And maintaining your own scraping infrastructure was like owning a vintage car, kinda romantic until it broke down.

Still, for many devs, building in-house meant security, flexibility, and long-term reliability, at least until scale hit.
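To make the “one tiny div class” problem concrete, here’s a minimal sketch of a hand-coded scraper. It uses Python’s built-in html.parser as a dependency-free stand-in for BeautifulSoup, and the markup and class names (product-card, product-title, price) are made up for illustration.

```python
from html.parser import HTMLParser

# Hypothetical markup for a product listing; real sites will differ.
SAMPLE_HTML = """
<div class="product-card">
  <h2 class="product-title">Blue Kettle</h2>
  <span class="price">$24.99</span>
</div>
<div class="product-card">
  <h2 class="product-title">Red Toaster</h2>
  <span class="price">$39.50</span>
</div>
"""


class ProductParser(HTMLParser):
    """Collects title/price pairs by matching hard-coded class names."""

    def __init__(self, title_class="product-title", price_class="price"):
        super().__init__()
        self.title_class = title_class
        self.price_class = price_class
        self._field = None   # field whose text we expect next, if any
        self._current = {}   # product being assembled
        self.products = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.title_class in classes:
            self._field = "title"
        elif self.price_class in classes:
            self._field = "price"

    def handle_data(self, data):
        text = data.strip()
        if self._field and text:
            self._current[self._field] = text
            self._field = None
            # Emit a product only once both fields have been seen.
            if "title" in self._current and "price" in self._current:
                self.products.append(self._current)
                self._current = {}


def scrape(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.products


products = scrape(SAMPLE_HTML)

# Simulate a redesign that renames a single class.
after_redesign = scrape(SAMPLE_HTML.replace("product-title", "item-name"))
```

On the original markup, products holds both items. After the simulated redesign, after_redesign comes back empty with no error raised, which is exactly the kind of silent breakage that used to eat mornings.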

2. Renting someone else’s engine: Third-party services

Then came third-party web scraping APIs.

You no longer had to write your own browser automation or dodge CAPTCHAs. You simply made an API call and received structured data back, often in JSON format.

This web scraping automation saved tons of time. It was fast, efficient, and predictable.

But… there was a catch.

Relying on someone else’s infrastructure meant less customization, higher costs, and dependency on a vendor’s uptime and policies. You were still a passenger in someone else’s car.
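For a feel of what “simply make an API call” looked like, here’s a rough sketch using only the standard library. The endpoint, the parameter names (url, render_js), and the "items" response key are all hypothetical; every vendor defines its own, so treat this as the shape of the idea rather than any real service’s API.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Hypothetical endpoint; substitute your vendor's documented URL.
API_BASE = "https://api.scraper-vendor.example/v1/extract"


def build_request(target_url, api_key, render_js=False):
    """Build the GET request for a (hypothetical) scraping API."""
    query = urlencode({"url": target_url, "render_js": str(render_js).lower()})
    return Request(
        f"{API_BASE}?{query}",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Accept": "application/json",
        },
    )


def parse_response(body):
    """Decode the JSON body and return the list under the assumed "items" key."""
    payload = json.loads(body)
    return payload.get("items", [])


def fetch_items(target_url, api_key):
    """The actual network call; not run here because the endpoint is a placeholder."""
    with urlopen(build_request(target_url, api_key), timeout=30) as resp:
        return parse_response(resp.read().decode("utf-8"))


# Offline demonstration: build a request and parse a sample payload.
request = build_request("https://shop.example/catalog?page=2", "demo-key")
items = parse_response('{"items": [{"name": "Blue Kettle", "price": 24.99}]}')
```

Notice how little of this code knows anything about the target site’s HTML. That’s the appeal, and also the dependency: everything interesting happens on the vendor’s side.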

Ringing in the AI era ~ When scrapers get smart!

As AI waltzed into the dev life, everything changed.

Instead of telling your scraper exactly what to do, you could now show it examples and let it figure out the pattern. Think of it as hiring a junior developer who learns as they go, but without the pizza bills.

Let’s look at how AI in data scraping actually works in practice.

AI as your coding copilot

Large Language Models (LLMs) like ChatGPT, Gemini, or Claude can now write scraping logic for you.

You can literally say:

“Hey, write a Python scraper that extracts all product names and prices from this site.”

And it’ll generate working code using libraries like Requests, BeautifulSoup, or Playwright.

This makes AI-powered web scraping accessible to even non-developers. Marketers, analysts, and founders, among others, can now spin up a scraper without requiring deep technical knowledge.

Sure, it’s still an iterative process where you might need to refine, tweak, or debug, but what used to take weeks can now happen before your coffee gets cold.

AI as a parsing engine

Here’s where it gets fun.

Sometimes, you don’t want to scrape an entire page; you just want to extract specific pieces. A few product reviews, a specific section, or a dynamically generated snippet.

In the past, you’d hunt down messy XPaths or regexes.

Now, you can literally feed the AI website scraper a sample of HTML and say,

“Extract the review text and rating from this structure.”

The AI figures it out, much like a human reading the page visually, and pulls just what you need.

This is a lifesaver for sites that constantly tweak their layouts or use inconsistent structures. Traditional scrapers break while AI scrapers adapt.
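Here’s a minimal sketch of that “feed it HTML, ask for fields” pattern. The model call is passed in as a plain function (call_llm) so you can plug in whichever provider you use; the prompt wording, the key names, and the fake_llm stand-in are assumptions for illustration, not any vendor’s API.

```python
import json

PROMPT_TEMPLATE = (
    "Extract every review from the HTML below.\n"
    "Return only a JSON array of objects with keys "
    '"review_text" (string) and "rating" (number).\n\n'
    "HTML:\n{html}"
)

REQUIRED_KEYS = {"review_text", "rating"}


def extract_reviews(html, call_llm):
    """Ask a model for structured reviews and validate what comes back.

    call_llm is any function that takes a prompt string and returns the
    model's text reply.
    """
    reply = call_llm(PROMPT_TEMPLATE.format(html=html))
    try:
        rows = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError("model reply was not valid JSON") from exc
    if not isinstance(rows, list):
        raise ValueError("expected a JSON array")
    # Keep only well-formed rows; a model can omit or rename keys.
    return [
        row for row in rows
        if isinstance(row, dict) and REQUIRED_KEYS.issubset(row)
    ]


def fake_llm(prompt):
    """Stand-in for a real model call so the sketch runs offline."""
    return '[{"review_text": "Sturdy and quiet.", "rating": 4.5}, {"rating": 2}]'


sample_html = '<div class="rev"><p>Sturdy and quiet.</p><i data-stars="4.5"></i></div>'
reviews = extract_reviews(sample_html, fake_llm)
```

The validation step matters more than it looks. Since the model’s reply is just text, checking that it parses and carries the keys you asked for is what keeps a bad reply from quietly polluting your dataset.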

The only real downside is the cost of the API. Each intelligent parse can be expensive at scale. But if your business depends on accuracy, that’s a fair trade.
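A quick back-of-the-envelope calculation shows why scale is the sticking point. Every number below is a made-up placeholder, not real pricing from any provider; swap in your own page volume, token counts, and rates.

```python
def estimate_monthly_cost(pages_per_day, tokens_per_page,
                          price_per_million_tokens, days=30):
    """Rough monthly spend for sending every page through a model."""
    total_tokens = pages_per_day * tokens_per_page * days
    return total_tokens / 1_000_000 * price_per_million_tokens


# Placeholder inputs: 10,000 pages a day, 4,000 tokens of HTML per page,
# and a hypothetical rate of 1.00 (in your currency) per million tokens.
cost = estimate_monthly_cost(
    pages_per_day=10_000,
    tokens_per_page=4_000,
    price_per_million_tokens=1.0,
)
```

With these placeholder inputs, that’s 1.2 billion tokens a month, so cost works out to 1200.0. Trimming the HTML before sending it, or only calling the model when cheaper methods fail, moves that number a lot.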

But what about images? Yep, AI handles those too!

Have you ever encountered a website that conceals valuable data within images, such as product specifications, tables, or screenshots? Traditional scrapers hit a wall there.

Well, AI doesn’t.

Using Optical Character Recognition (OCR) fused with machine learning, you can now extract text straight from images, even when it’s embedded or stylized.

These AI data extraction tools are getting shockingly accurate. They can read faint fonts, handle different languages, and clean up noisy visuals.

The catch is that blurry or low-contrast images can still stump them. Overall, though, the gap between “can’t read that” and “done in seconds” has nearly vanished.

The DOM still matters (But AI makes it easier)

Let’s not throw the old tools away just yet.

Parsing the HTML or DOM structure is still crucial for precision. Developers still rely on libraries like BeautifulSoup, Jsoup, or HTML Agility Pack to retrieve exactly the elements they want.

What’s changed is how AI simplifies the process.

Instead of painstakingly inspecting HTML trees, you can ask an AI bot for web scraping to locate, describe, and extract relevant elements automatically.

In short, you get the control of traditional scrapers without the pain of manual parsing. That’s automated web scraping with AI done right.

When manual still makes sense

All that being said, not everything needs to be automated.

If you’re gathering a handful of data points for a one-off project, manual browsing, copying, or screenshotting is fine.

But for anything beyond that? You’re wasting hours.

With no-code AI scrapers, even non-technical teams can now automate this grunt work with point-and-click interfaces. So yeah, manual scraping is officially the typewriter of the web data world: nostalgic, but inefficient.

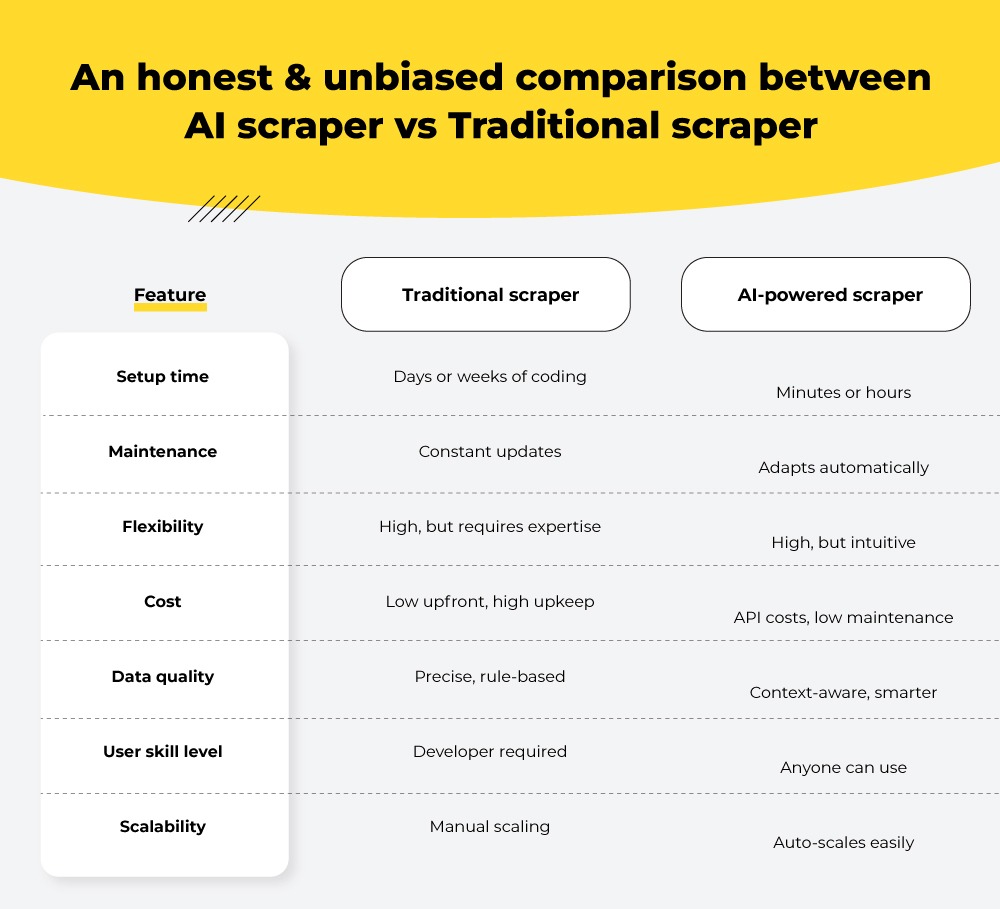

AI scraper vs Traditional scraper ~ The showdown

Let’s compare how the two stack up against each other.

So, while traditional scrapers are great for structured, repetitive jobs, AI scrapers are great for unstructured, dynamic, or unpredictable ones.

When used together, they create something magical, a hybrid scraping ecosystem that’s powerful, flexible, and accessible.
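One way to picture that hybrid setup, as a rough sketch: try the cheap, deterministic rule first and only fall back to an AI extractor when the rule comes up empty. The regex, the markup, and the fake_ai_extract stand-in are all assumptions for illustration.

```python
import re


def extract_price_with_rules(html):
    """Cheap, deterministic path: a fixed pattern tied to today's markup."""
    match = re.search(r'<span class="price">\s*\$?([\d.,]+)\s*</span>', html)
    return match.group(1) if match else None


def extract_price(html, ai_extract):
    """Try the rule first; call the costlier AI extractor only on a miss."""
    price = extract_price_with_rules(html)
    if price is not None:
        return price, "rules"
    return ai_extract(html), "ai"


def fake_ai_extract(html):
    """Stand-in for a model call so the sketch runs offline."""
    return "24.99"


# Markup the rule knows about: handled without touching the AI path.
known = extract_price('<span class="price">$24.99</span>', fake_ai_extract)

# Markup after a layout change: the rule misses, so the AI path takes over.
changed = extract_price('<div data-cost="24.99">Now only 24.99</div>', fake_ai_extract)
```

Returning which path produced the value ("rules" or "ai") is a small design choice that pays off later: a sudden jump in "ai" results is a useful signal that a site has changed and the rule needs updating.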

An insight into how businesses are using AI web scraping

AI isn’t just for show; on the contrary, it’s delivering serious business value.

Use case 1: E-commerce intelligence

Let’s say you’re launching a new Shopify store. You need to populate product data, such as names, prices, and specs, from competitor sites.

Instead of spending days copying or coding, you feed the URLs to an AI scraper. It pulls everything cleanly, and even structures it in a ready-to-import format.

Use case 2: Information aggregation

Running a news, finance, or travel portal? AI scrapers continuously pull updates from multiple sources and refresh your database daily, no human babysitting required.

Use case 3: Research & insights

Marketers and analysts use AI data scraping to detect trends, track sentiment, and even analyze pricing fluctuations in real time.

Use case 4: Building scraping-as-a-service tools

Some startups are going meta, using AI to build tools that scrape for others. They create client-specific scraping workflows powered by pre-trained models.

Data, once a cost center, becomes a product.

Kadoa ~ A case in point

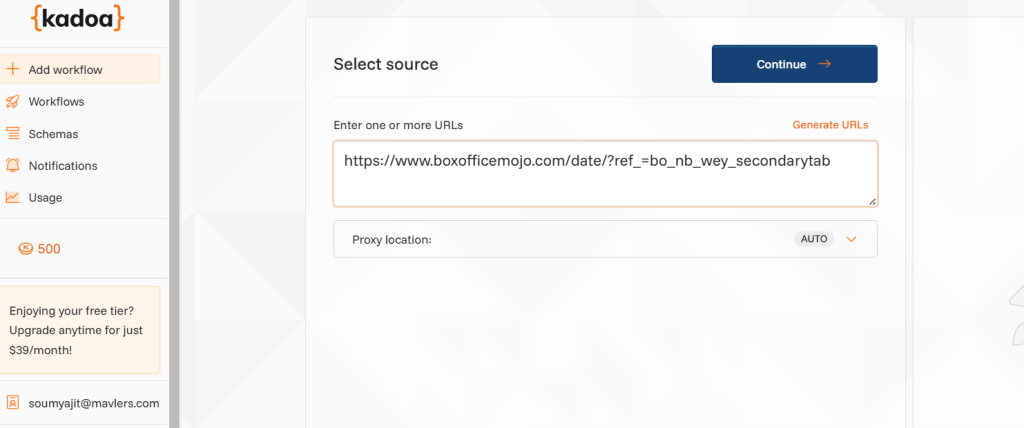

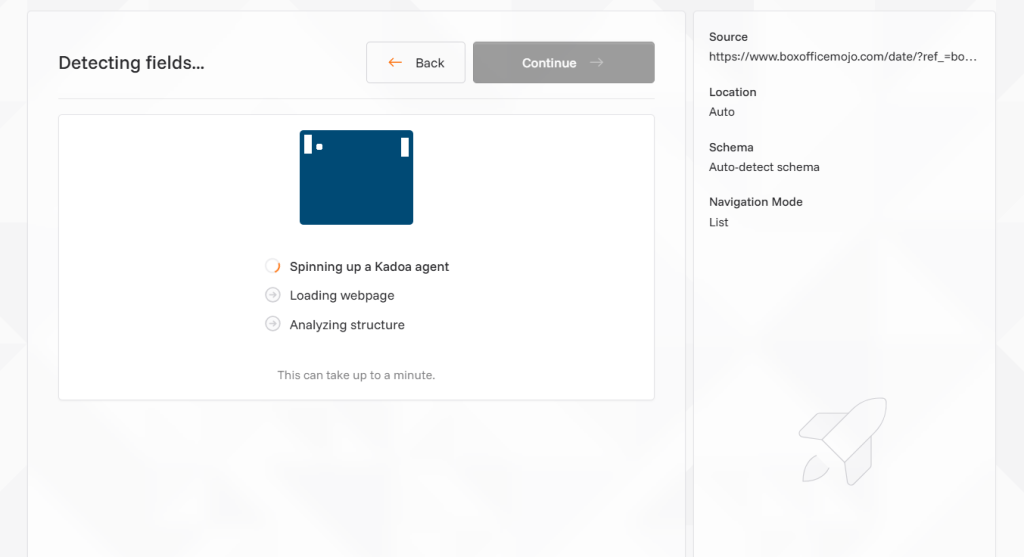

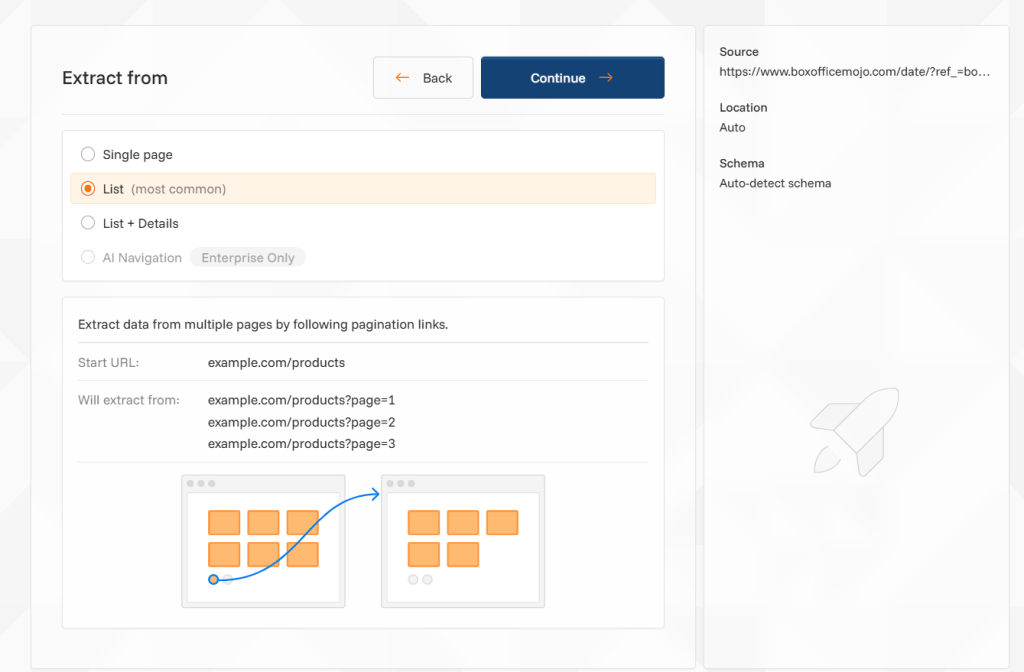

If you’d like a real-world example of how AI-powered web scraping is reshaping data collection, look no further than Kadoa, an AI website scraper built to handle data extraction across domains like finance, healthcare, e-commerce, and entertainment.

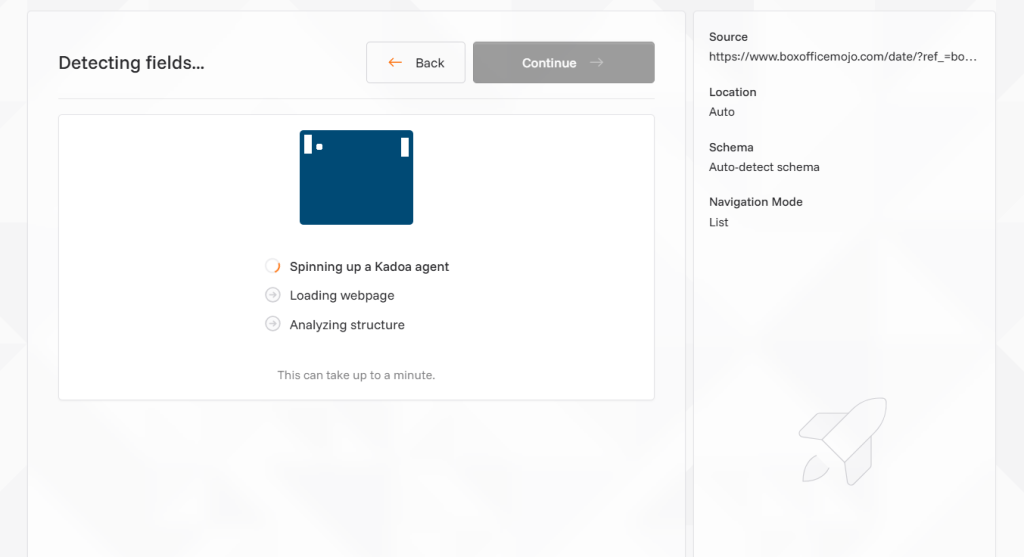

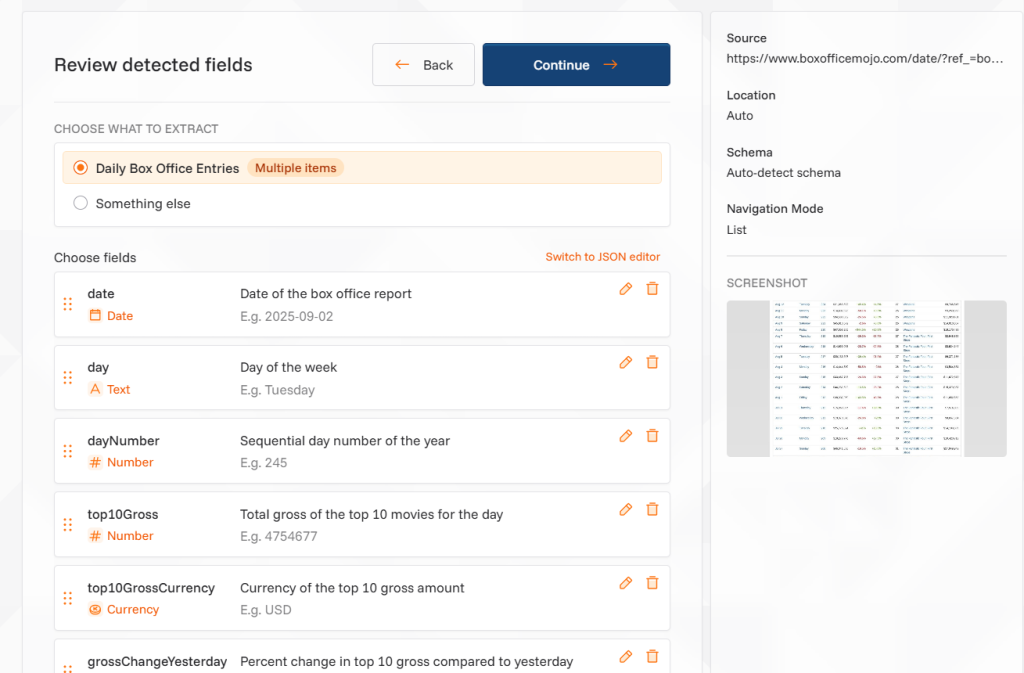

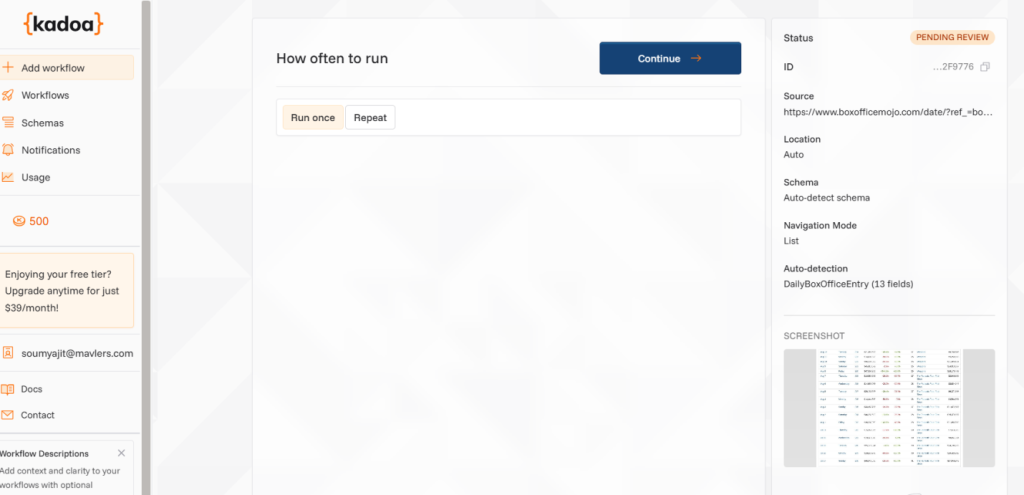

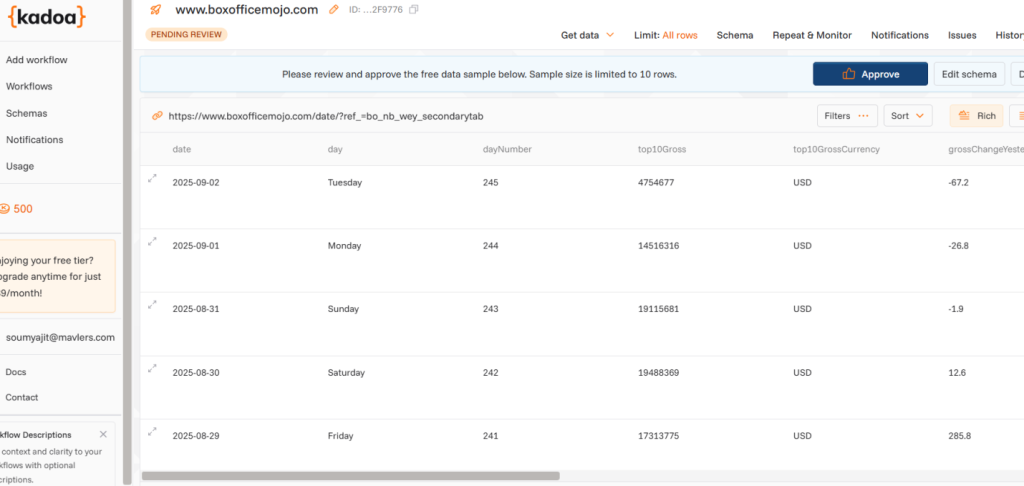

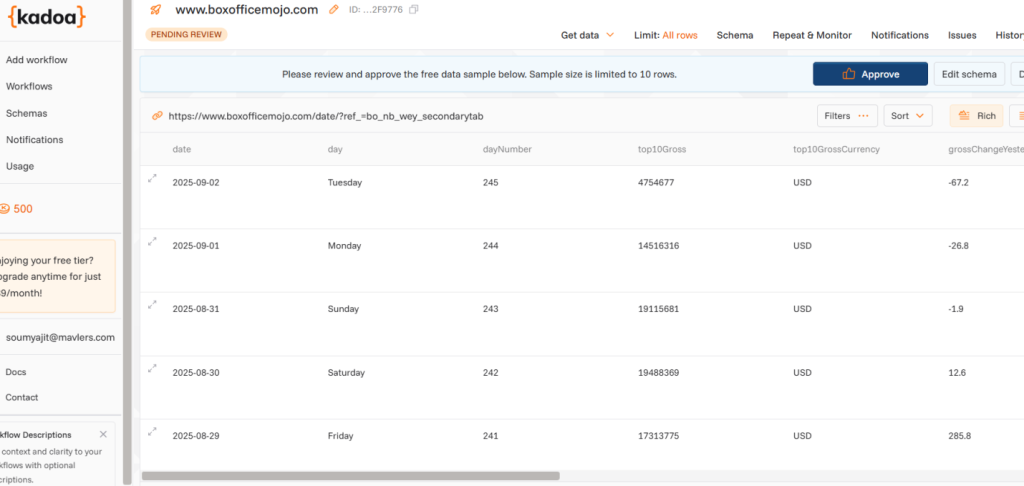

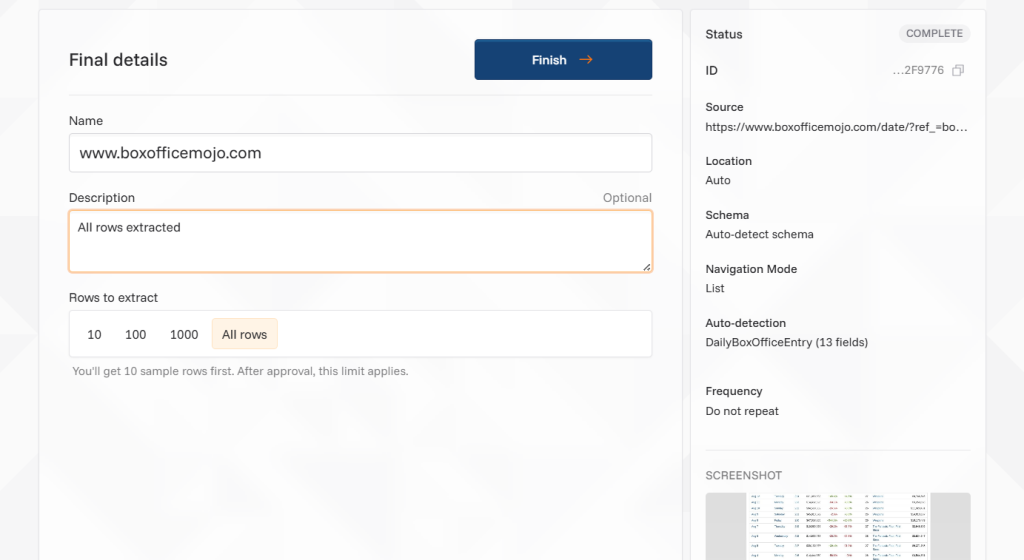

Here’s how a typical Kadoa workflow works (let’s use boxofficemojo.com as an example):

- Add a workflow – Input the URL(s) of the website(s) you want to scrape.

- Select extraction depth – Decide how deep the scraper should crawl (specific sections, categories, or the entire site).

- Choose fields to extract – Define what data you want, such as titles, prices, links, or descriptions.

- Set a schedule – Automate extraction to run daily, weekly, or on demand.

- Enable notifications – Get alerts when data extraction completes.

- Download structured data – Access clean, ready-to-use JSON or CSV outputs for further processing.

This is what no-code AI scrapers like Kadoa do best: transforming a once tedious, code-heavy process into a streamlined, intelligent workflow accessible to both developers and business users.

Wrapping up

Honestly, we’re moving from “extract and store” to “extract, understand, and act.”

For developers, this means less grunt work and more creativity, while for marketers, it means faster insights.

And for business owners, it means the freedom to finally make data work for them, not the other way around.

Soumyajit Das - Subject Matter Expert (SME)

Soumyajit is a tech enthusiast, problem-solver, and currently a Senior Project Coordinator at Mavlers. He conceptualizes, supervises, and delivers solutions to clients, blending tech know-how with sharp business insights to build something faster, smarter, and more scalable. When not working or writing about the next big thing in tech, you’ll find him reading, exploring new ideas online, or binge-watching his favorite shows.

Naina Sandhir - Content Writer

A content writer at Mavlers, Naina pens quirky, inimitable, and damn relatable content after an in-depth and critical dissection of the topic in question. When not hiking across the Himalayas, she can be found buried in a book with spectacles dangling off her nose!
